Here, I sum up an interesting paper on content-based ranking of blogs.

A blog is relevant if it focuses on a central topic.

This is called topical consistency.

The authors introduce the coherence score to measure this consistency.

It is based on the intra-blog clustering structure relative to the clustering structure of the background collection.

One has to differentiate between short-term and long-term interest in blogs.

Further, the key features of blogs are a strong social aspect and their inherent noisiness.

|

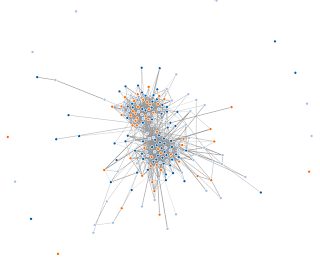

| Forces Layout of blogs interlinkage using D3 |

Topical noise stems from random-interest blogs and diaries. This creates topical diffuseness (a loose clustering).

The goal is to find the bloggers most closely associated with a specific topic.

Most blogs fail to maintain a central topical thrust. Nevertheless, the trend is toward ranking whole blogs in order to recommend interesting feeds to readers.

Both the timing and the relevance of topics have to be taken into account.

To this end, two kinds of interest should be measured: recurring interest (time-based) and focused interest (cohesiveness of the language across posts).
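The recurring-interest side can be illustrated with a small sketch. This is a hypothetical measure, not taken from the paper: the share of weekly periods between a blog's first and last post that contain at least one on-topic post. The function name `recurring_interest`, the weekly period and the boolean on-topic labels are all assumptions made for illustration.

```python
from datetime import date

def recurring_interest(post_dates, on_topic, period_days=7):
    """Hypothetical measure: share of periods (default one week) between the
    first and last post that contain at least one on-topic post."""
    if not post_dates:
        return 0.0
    start, end = min(post_dates), max(post_dates)
    n_periods = (end - start).days // period_days + 1
    # Index of the period each on-topic post falls into.
    active = {
        (d - start).days // period_days
        for d, topical in zip(post_dates, on_topic)
        if topical
    }
    return len(active) / n_periods

dates = [date(2009, 1, 1), date(2009, 1, 9), date(2009, 1, 20), date(2009, 1, 29)]
print(recurring_interest(dates, [True, True, False, True]))
```

Here the posts span five weeks and the three on-topic posts fall into weeks 0, 1 and 4, so the measure is 3/5 = 0.6. A blog that returns to a topic regularly scores high, while a one-off burst of posts scores low.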

The authors' coherence score captures the topical focus and tightness of subtopics in each blog. Thus, it handles the focused interest.

Lexical cohesion is an alternative to the coherence score. It measures the semantic relationships between content words.

For this, external thesauri such as WordNet are used to build lexical chains. The number of chains reflects the number of distinct topics. A so-called chain score measures the significance of each lexical chain.
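A toy version of lexical chaining makes this concrete. In this sketch a hand-made dictionary `THESAURUS` stands in for WordNet (an assumption, so the code runs without external data), each word joins the first chain that shares a concept with it, and the chain score uses one common formulation from the lexical-chain literature: chain length times a homogeneity index (1 minus the share of distinct words). The paper's exact chaining and scoring may differ.

```python
# Hypothetical toy thesaurus standing in for WordNet: word -> concept ids.
THESAURUS = {
    "car": {"vehicle"}, "automobile": {"vehicle"}, "truck": {"vehicle"},
    "engine": {"vehicle", "machine"},
    "recipe": {"food"}, "pasta": {"food"}, "sauce": {"food"},
}

def build_chains(words, thesaurus):
    """Greedily add each known word to the first chain sharing a concept."""
    chains = []  # each chain: {"concepts": set of ids, "words": list of words}
    for w in words:
        concepts = thesaurus.get(w)
        if not concepts:
            continue  # words missing from the thesaurus are ignored
        for chain in chains:
            if chain["concepts"] & concepts:
                chain["words"].append(w)
                chain["concepts"] |= concepts
                break
        else:  # no related chain found: start a new one
            chains.append({"concepts": set(concepts), "words": [w]})
    return [chain["words"] for chain in chains]

def chain_score(chain):
    """Chain length times homogeneity (1 - distinct words / length)."""
    length = len(chain)
    homogeneity = 1 - len(set(chain)) / length
    return length * homogeneity

words = "the car engine needs a truck recipe car pasta sauce car".split()
chains = build_chains(words, THESAURUS)
for chain in chains:
    print(chain, chain_score(chain))
```

The sample text yields two chains (a vehicle chain and a food chain), matching its two topics. The vehicle chain scores 2.0 because "car" recurs, while the food chain of three distinct words scores 0 under this formulation, which shows how strongly it rewards repetition.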

Lexical cohesion is sensitive to the progression of topics but blind to their hierarchical structure.

The coherence score gives the proportion of coherent document pairs within a blog, relative to the background collection. Coherent pairs are identified by thresholding the cosine similarity of documents.
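The coherence score can be sketched in a few lines of Python. Assumptions: plain term-frequency vectors over whitespace tokens, and a threshold `theta` calibrated on the background collection so that only the top 5% of background document pairs count as coherent. This calibration rule, the 5% value and all function names are illustrative choices, not confirmed details of the paper.

```python
import math
from collections import Counter
from itertools import combinations

def tf_vector(text):
    """Term-frequency vector over lowercased whitespace tokens."""
    return Counter(text.lower().split())

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def background_threshold(background_docs, top_fraction=0.05):
    """Assumed calibration: theta is the similarity reached by the top
    `top_fraction` of document pairs in the background collection."""
    vecs = [tf_vector(d) for d in background_docs]
    sims = sorted((cosine(a, b) for a, b in combinations(vecs, 2)), reverse=True)
    if not sims:
        raise ValueError("need at least two background documents")
    k = max(1, int(len(sims) * top_fraction))
    return sims[k - 1]

def coherence_score(blog_posts, theta):
    """Proportion of post pairs in one blog whose cosine similarity >= theta."""
    vecs = [tf_vector(p) for p in blog_posts]
    pairs = list(combinations(vecs, 2))
    if not pairs:
        return 0.0
    coherent = sum(1 for a, b in pairs if cosine(a, b) >= theta)
    return coherent / len(pairs)

posts = ["python code python", "python code tips", "cooking pasta recipe"]
print(coherence_score(posts, theta=0.5))
```

Only the two programming posts form a coherent pair (cosine of about 0.77), so one of three pairs passes and the score is 1/3. In practice `theta` would come from `background_threshold` applied to a large collection rather than being fixed by hand.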

The score measures the relative tightness of the clustering for a blog and prefers structured document sets with fewer sub-clusters.
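Why fewer sub-clusters score higher can be seen with idealized arithmetic. Assume, hypothetically, that exactly the pairs inside a sub-cluster are coherent and no pair across sub-clusters is. The score is then the sum of C(n_k, 2) over all sub-clusters k, divided by C(N, 2) for a blog of N posts. The function name `idealized_coherence` is illustrative.

```python
from math import comb

def idealized_coherence(cluster_sizes):
    """Share of coherent pairs, assuming exactly the within-cluster pairs are coherent."""
    total = comb(sum(cluster_sizes), 2)
    if total == 0:
        return 0.0
    return sum(comb(k, 2) for k in cluster_sizes) / total

for sizes in ([6], [3, 3], [2, 2, 2]):
    print(sizes, idealized_coherence(sizes))
```

For six posts, one tight cluster gives 15/15 = 1.0, two sub-clusters of three give 6/15 = 0.4, and three sub-clusters of two give 3/15 = 0.2, so the score drops as the same posts split into more sub-topics.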

Thus, the coherence score captures the clustering structure of the data, i.e., its topical consistency.

It is independent of external resources and adapts to the fast-changing environment of blogs.

Its complexity is O(L · N²), where L is the average document length and N is the number of documents, and it can be used beyond text data (e.g., on blog structure or linkage).
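Since the score only needs a pairwise similarity and a threshold, the same procedure carries over to non-textual features. The sketch below assumes each post is represented by the set of sites it links to (a hypothetical representation with made-up `.example` domains) and uses cosine similarity on these binary vectors. The loop over all N(N-1)/2 pairs is also where the quadratic factor in the complexity comes from; for text, each comparison additionally costs time proportional to the document length.

```python
import math
from itertools import combinations

def set_cosine(a, b):
    """Cosine similarity of two binary vectors given as sets of active features."""
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))

def link_coherence(outlinks_per_post, theta):
    """Share of post pairs whose outlink sets have cosine >= theta.
    Comparing all N*(N-1)/2 pairs is the source of the quadratic cost."""
    pairs = list(combinations(outlinks_per_post, 2))
    if not pairs:
        return 0.0
    coherent = sum(1 for a, b in pairs if set_cosine(a, b) >= theta)
    return coherent / len(pairs)

# Hypothetical outlink sets of three posts from one blog.
outlinks = [
    {"site-a.example", "site-b.example"},
    {"site-a.example", "site-b.example", "site-c.example"},
    {"recipes.example"},
]
print(link_coherence(outlinks, theta=0.5))
```

The first two posts share two of their links (cosine of about 0.82), the third links elsewhere, so the link-based score is 1/3.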

It is integrated into a blog ranking to boost blogs that are both topically relevant and topically consistent.
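How the coherence score might enter the ranking can be sketched as a re-ranking step. The linear interpolation with weight `lam` below is an assumption for illustration; the paper's exact way of combining relevance and coherence may differ, and both scores are assumed to lie in [0, 1].

```python
def rerank(blogs, lam=0.3):
    """Rank (name, relevance, coherence) triples by a hypothetical linear
    interpolation (1 - lam) * relevance + lam * coherence, best first."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    scored = [(name, (1 - lam) * rel + lam * coh) for name, rel, coh in blogs]
    return sorted(scored, key=lambda item: item[1], reverse=True)

blogs = [("diary", 0.8, 0.1), ("focused", 0.7, 0.9)]
print(rerank(blogs))
print(rerank(blogs, lam=0.0))
```

With `lam=0.3`, the focused blog (0.7 · 0.7 + 0.3 · 0.9 = 0.76) overtakes the slightly more relevant but diffuse diary (0.7 · 0.8 + 0.3 · 0.1 = 0.59); with `lam=0.0` the ranking falls back to relevance alone.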

Jiyin He, Wouter Weerkamp, Martha Larson, Maarten de Rijke: An effective coherence measure to determine topical consistency in user-generated content. IJDAR 12(3): 185-203 (2009)